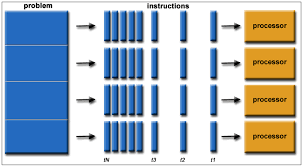

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time.

There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.

As power consumption (and consequently heat generation) by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

In parallel computing, a computational task is typically broken down into several, often many, very similar sub-tasks that can be processed independently and whose results are combined afterwards, upon completion. In contrast, in concurrent computing, the various processes often do not address related tasks; when they do, as is typical in distributed computing, the separate tasks may have a varied nature and often require some inter-process communication during execution.
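The split, process, and combine pattern described above can be sketched in a few lines of Python. This is only an illustration, not a reference implementation: the function names (`split`, `sum_of_squares`, `parallel_sum_of_squares`) and the sum-of-squares workload are assumptions chosen for the example. It uses a thread pool so that it runs anywhere; in standard CPython, threads do not execute CPU-bound Python code in parallel, so for real speedups one would likely pass `ProcessPoolExecutor` as `executor_cls` instead.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """One independent sub-task: it needs no data from the other sub-tasks."""
    return sum(x * x for x in chunk)


def split(data, n_chunks):
    """Divide data into n_chunks contiguous pieces of nearly equal size."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def parallel_sum_of_squares(data, n_chunks=4, executor_cls=ThreadPoolExecutor):
    """Process the chunks independently, then combine the partial results."""
    chunks = split(data, n_chunks)
    with executor_cls(max_workers=n_chunks) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)  # the "combine afterwards" step


print(parallel_sum_of_squares(list(range(10))))  # 285
```

Note that the sub-tasks never talk to each other while running; the only coordination is the final `sum` over the partial results.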

Is parallel computing difficult?

Parallelism is difficult, and it gets harder when the tasks are similar to each other and demand the same amount of attention, such as calculating two different sums. One person can focus on only one of the two sums at a time, so real parallelism isn’t a natural way of thinking for us humans.

What are the issues in parallel computing?

- Amount of Parallelizable CPU-Bound Work.

- Task Granularity.

- Load Balancing.

- Memory Allocations and Garbage Collection.

- False Cache-Line Sharing.

- Locality Issues.
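To make the load-balancing item in the list above concrete, here is a small, deterministic simulation. It is a sketch under stated assumptions: task durations are known numbers, there is at least one task, and the two strategies (`static_makespan`, `dynamic_makespan`) are hypothetical helpers written for this example rather than part of any library. It compares handing each worker a fixed contiguous block of tasks against letting each task go to whichever worker becomes free first.

```python
import heapq


def static_makespan(durations, n_workers):
    """Give each worker one contiguous block of tasks up front.

    Returns the finish time of the slowest worker. Assumes a non-empty task list.
    """
    block = -(-len(durations) // n_workers)  # ceiling division
    loads = [sum(durations[i:i + block]) for i in range(0, len(durations), block)]
    return max(loads)


def dynamic_makespan(durations, n_workers):
    """Hand each task, in order, to the worker that becomes free first.

    This models a shared work queue. Returns the finish time of the last worker.
    """
    free_at = [0] * n_workers  # time at which each worker is next idle
    heapq.heapify(free_at)
    for d in durations:
        start = heapq.heappop(free_at)      # earliest available worker
        heapq.heappush(free_at, start + d)  # it is busy until start + d
    return max(free_at)


durations = [8, 7, 6, 5, 1, 1, 1, 1]  # uneven task sizes, 30 time units in total
print(static_makespan(durations, 2))   # 26
print(dynamic_makespan(durations, 2))  # 15
```

With two workers the best possible finish time here is 15 (half of the total work). The static split leaves one worker with almost all of the long tasks, while the queue-based assignment reaches the ideal for this particular input.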

What are the primary reasons for using parallel computing?

There are two primary reasons for using parallel computing: to save wall-clock time and to solve larger problems.
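How much wall-clock time can actually be saved depends on how much of the work can run in parallel. One common way to estimate this is Amdahl's law, which the text here does not name and which is brought in as an assumption about how to model the speedup. The sketch below treats `p` as the parallelizable fraction of the run time and `n` as the number of workers; both names, and the function `amdahl_speedup`, are illustrative.

```python
def amdahl_speedup(p, n):
    """Estimated speedup under Amdahl's law.

    p: fraction of the original run time that can be parallelized (0.0 to 1.0)
    n: number of workers sharing the parallel part
    The serial part (1 - p) is unaffected by adding workers.
    """
    return 1.0 / ((1.0 - p) + p / n)


# With 90% parallelizable work, the speedup can never exceed 1 / (1 - 0.9) = 10,
# no matter how many workers are added.
for workers in (2, 8, 64):
    print(workers, round(amdahl_speedup(0.9, workers), 2))
```

The takeaway is that the serial remainder, not the worker count, soon dominates the achievable time savings.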

What are the applications of parallel computing?

Notable applications for parallel processing (also known as parallel computing) include computational astrophysics, geo-processing (or seismic surveying), climate modeling, agriculture estimates, financial risk management, video color correction, computational fluid dynamics, medical imaging and drug discovery.

What is the biggest problem with MIMD processors?

For shared-memory MIMD machines, two known disadvantages are that scalability beyond thirty-two processors is difficult and that the shared memory model is less flexible than the distributed memory model. Examples of shared memory multiprocessors include UMA (uniform memory access) and COMA (cache-only memory access) designs.

Is cloud computing distributed computing?

Cloud computing is built on distributed computing. Technically, if you have an application that syncs information across several of your devices, you are doing cloud computing, and that application is in turn using distributed computing.

How is parallel computing different from multiprocessing?

Parallel computing via message passing allows multiple computing devices to transmit data to each other: an exchange is organized between the parts of the computing complex that work simultaneously. Multiprocessing is the use of two or more central processing units (CPUs) in one physical computer.

How many types of multiprocessor are there?

There are two types of multiprocessors: shared memory multiprocessors and distributed memory multiprocessors. In a shared memory multiprocessor, all the CPUs share a common memory, whereas in a distributed memory multiprocessor, every CPU has its own private memory.
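The difference between the two memory models can be imitated in software. The following is an analogy only, using Python threads on one machine rather than real multiprocessor hardware, and the function names are made up for the example. In the first function all workers update one shared variable and need a lock; in the second, each worker keeps its data private and communicates only by sending a message.

```python
import queue
import threading


def shared_memory_sum(chunks):
    """Shared memory style: every worker updates the same total."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)  # work on private data first
        with lock:            # then serialize access to the shared value
            total += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(chunks):
    """Distributed memory style: workers share nothing and send results as messages."""
    mailbox = queue.Queue()

    def worker(chunk):
        mailbox.put(sum(chunk))  # the only interaction is an explicit message

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(mailbox.get() for _ in chunks)


chunks = [[1, 2, 3], [4, 5], [6]]
print(shared_memory_sum(chunks))    # 21
print(message_passing_sum(chunks))  # 21
```

Both versions compute the same result. The shared version has to guard the common variable against simultaneous updates, while the message-passing version avoids shared state at the cost of explicit communication.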

Why is a vector supercomputer so fast?

Vector processors operate on vectors (linear arrays of 64-bit floating-point numbers) to obtain results quickly. Compared with scalar code, vector code could reduce pipelining hazards by as much as 90 percent. The Cray-1, an early vector supercomputer, was also the first computer to use transistor memory instead of high-latency magnetic core memory.