Decision tree learning is one of the most important, widely used, and practical methods for classification.

Widely used decision tree learning algorithms include ID3, ASSISTANT, and C4.5.

INTRODUCTION

Decision tree learning is a method for approximating discrete-valued target functions, in which the learned function is represented by a decision tree. Learned trees can also be represented as sets of if-then rules to improve human readability.

These learning methods are inductive inference algorithms and have been successfully applied to tasks ranging from diagnosing medical cases to assessing the credit risk of loan applicants.
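To illustrate how a learned tree can be rewritten as if-then rules, here is a minimal Python sketch. The tree, its attribute names (`outlook`, `humidity`, `wind`), and the helper `tree_to_rules` are hypothetical and chosen only for illustration; they do not come from any particular dataset or library.

```python
# Hypothetical tree: an internal node is (attribute, {value: subtree}),
# and a leaf is simply a class label string.
TREE = ("outlook", {
    "sunny": ("humidity", {"high": "no", "normal": "yes"}),
    "overcast": "yes",
    "rain": ("wind", {"strong": "no", "weak": "yes"}),
})


def tree_to_rules(tree, conditions=()):
    """Return one if-then rule per root-to-leaf path."""
    if isinstance(tree, str):  # leaf: emit the conditions collected so far
        premise = " and ".join(f"{a} = {v}" for a, v in conditions) or "true"
        return [f"if {premise} then class = {tree}"]
    attribute, branches = tree
    rules = []
    for value, subtree in branches.items():
        rules.extend(tree_to_rules(subtree, conditions + ((attribute, value),)))
    return rules


for rule in tree_to_rules(TREE):
    print(rule)
```

Each printed rule corresponds to one path from the root to a leaf, so this small tree yields five rules, for example `if outlook = overcast then class = yes`.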

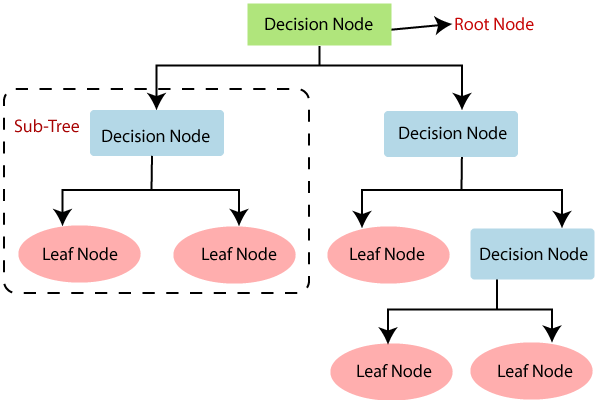

DECISION TREE REPRESENTATION

Decision trees classify instances by sorting them down the tree from the root to some leaf node, which provides the classification of the instance. Each node in the tree specifies a test of some attribute of the instance, and each branch descending from that node corresponds to one of the possible values for this attribute.

An instance is classified by starting at the root node of the tree, testing the attribute specified by this node, and then moving down the tree branch corresponding to the value of that attribute in the given example. This process is then repeated for the subtree rooted at the new node.
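The classification procedure described above can be sketched in a few lines of Python. This is only an illustrative sketch: the nested-tuple tree encoding, the attribute names, and the function `classify` are assumptions made for the example, not part of any specific algorithm such as ID3 or C4.5.

```python
# Hypothetical tree: an internal node is (attribute, {value: subtree}),
# and a leaf is a class label string.
TREE = ("outlook", {
    "sunny": ("humidity", {"high": "no", "normal": "yes"}),
    "overcast": "yes",
    "rain": ("wind", {"strong": "no", "weak": "yes"}),
})


def classify(tree, instance):
    """Sort an instance down the tree from the root to a leaf."""
    if not isinstance(tree, tuple):     # leaf node: it holds the classification
        return tree
    attribute, branches = tree          # the test specified by this node
    value = instance[attribute]         # the instance's value for that attribute
    # Move down the matching branch and repeat on the subtree rooted there.
    return classify(branches[value], instance)


example = {"outlook": "sunny", "humidity": "normal", "wind": "weak"}
print(classify(TREE, example))  # prints "yes"
```

In this sketch an attribute value that has no matching branch raises a `KeyError`; a practical implementation would need some policy for unseen values, which is outside the scope of this example.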

Terminology

Root Node (Top Node): It represents the whole sample, which is further divided into two or more homogeneous sets.

Splitting: The process of dividing a node into two or more sub-nodes.

Decision Node: When a sub-node splits into further sub-nodes, it is called a decision node.

Leaf or Terminal Node: A node with no children is called a leaf (or terminal) node.

Pruning (opposite of Splitting): Reducing the size of a decision tree by removing nodes is called pruning.

Branch or Sub-Tree: A subsection of the decision tree is called a branch or sub-tree.

Parent and Child Node: A node that is divided into sub-nodes is called the parent node of those sub-nodes, whereas the sub-nodes are the children of the parent node.
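The terms above can be made concrete with a small Python sketch. The `Node` class, its method names, and the example attributes are hypothetical, introduced only to illustrate the vocabulary; real decision tree learners choose splits from data rather than taking them as arguments.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str                                    # attribute tested, or class label at a leaf
    children: dict = field(default_factory=dict)  # branch value -> child Node

    def is_leaf(self):
        """A leaf (terminal) node has no children."""
        return not self.children

    def split(self, attribute, outcomes):
        """Splitting: make this node a parent with one child per branch value."""
        self.label = attribute
        self.children = {value: Node(cls) for value, cls in outcomes.items()}

    def prune(self, class_label):
        """Pruning: remove the sub-tree below this node, turning it into a leaf."""
        self.label = class_label
        self.children = {}

    def count_leaves(self):
        """Count the leaf nodes in the sub-tree rooted at this node."""
        if self.is_leaf():
            return 1
        return sum(child.count_leaves() for child in self.children.values())


root = Node("unsplit")  # root node: stands for the whole sample
root.split("outlook", {"sunny": "no", "overcast": "yes", "rain": "yes"})
# Splitting a sub-node turns it into a decision node (and a parent itself).
root.children["sunny"].split("humidity", {"high": "no", "normal": "yes"})
print(root.count_leaves())  # prints 4

root.children["sunny"].prune("no")  # pruning undoes that split
print(root.count_leaves())  # prints 3
```

Here `root` is the parent of the three `outlook` sub-nodes, and the sub-tree under `sunny` is a branch that exists until it is pruned back to a single leaf.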